Program Team Statement

This issue of our periodic bulletin, “Safe Voice – Accounts Under the Microscope,” is published amid the continued escalation of hostile discourse in the Sudanese digital space, accompanied by increasing attempts to normalize hatred and turn it into a part of daily interaction on social platforms.

Through this documentary and analytical effort, the Safe Voice program team seeks to contribute to building collective awareness of the danger of such discourse—not only as a linguistic phenomenon but also as an active tool in producing both symbolic and physical violence within society.

We believe that confronting hatred begins with understanding, monitoring, and analysis before it extends to awareness, advocacy, and policymaking. Therefore, this bulletin is committed to providing a precise and objective reading of what is published in the public sphere, free from prejudice, while maintaining the highest standards of professionalism and respect for users’ privacy.

Our ultimate goal is to help transform the digital space into a safer and fairer environment that reflects the values of dialogue, diversity, and citizenship, paving the way for a new generation of users aware of their responsibilities toward the words they write or interact with.

Safe Voice Program Team

KADN Center for Justice and Human Rights – October 2025

Introduction

The bulletin “Safe Voice – Accounts Under the Microscope” continues its monthly publication as a monitoring and analytical tool aimed at understanding the patterns of hate speech in the Sudanese digital space and tracking the evolution of its forms and trends across the most used social media platforms.

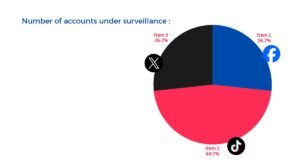

This issue relies on both qualitative and quantitative analysis of thirty active accounts on TikTok, Facebook, and X (Twitter), selected based on their influence level and audience engagement.

This edition represents a new step in the efforts of the Safe Voice program to follow the dynamics of hostile discourse online as an indicator of the social and political state Sudan is experiencing amid prolonged crises. Digital content is no longer merely a reflection of reality but an active force that reshapes attitudes, influences public sentiment, and sometimes fuels aggression and societal division.

In this issue, we highlight the linguistic and rhetorical shifts observed during the monitored period and provide a quantitative reading of engagement with inciting content, along with a qualitative analysis of the rhetorical characteristics and narratives that producers of hate speech use to influence public opinion. The issue also includes practical recommendations and proposed policies to counter this type of discourse through a comprehensive approach that considers its legal, social, and awareness dimensions.

This bulletin reaffirms the commitment of the KADN Center for Justice and Human Rights to promoting digital justice and combating discrimination and hatred, in the belief that cyberspace can be a space for construction and understanding rather than destruction and division.

Methodology

The Safe Voice program team prepared this issue of “Accounts Under the Microscope” using a mixed qualitative and quantitative monitoring and analysis approach to examine hate speech across social media platforms, including TikTok, Facebook, and X (Twitter).

Thirty active accounts were selected according to specific criteria that considered engagement level, type of content, and the frequency of using inciting or discriminatory linguistic patterns. The monitoring relied solely on publicly available data, without any interference with user privacy or access to restricted content.

The analysis included classifying accounts by platform and discourse type (ethnic, political, or dangerous incitement) and identifying the most common linguistic and rhetorical features such as generalization, dehumanization, and explicit or implicit calls for violence.

Digital analysis tools were used to estimate engagement levels (comments, likes, shares) and to correlate them with the prevalent discourse patterns, supported by manual review to ensure accuracy and objectivity.
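The tallying step described above could be sketched roughly as follows. This is a minimal illustration, not the program team's actual tooling: the `Post` record, its field names, and the sample values are all assumptions introduced here, and the discourse-type labels are assumed to have been assigned beforehand by manual review.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    """One publicly available post; every field name here is illustrative."""
    platform: str        # "TikTok", "Facebook", or "X"
    discourse_type: str  # "ethnic", "political", or "dangerous incitement"
    comments: int
    likes: int
    shares: int


def engagement_by_category(posts):
    """Sum comments, likes, and shares per (platform, discourse type) pair."""
    totals = defaultdict(lambda: {"comments": 0, "likes": 0, "shares": 0})
    for post in posts:
        bucket = totals[(post.platform, post.discourse_type)]
        bucket["comments"] += post.comments
        bucket["likes"] += post.likes
        bucket["shares"] += post.shares
    return dict(totals)


# Placeholder records for illustration only, not figures from the bulletin.
sample_posts = [
    Post("TikTok", "ethnic", comments=10, likes=200, shares=30),
    Post("TikTok", "ethnic", comments=5, likes=100, shares=10),
    Post("X", "political", comments=8, likes=40, shares=12),
]

totals = engagement_by_category(sample_posts)
for (platform, discourse_type), counts in sorted(totals.items()):
    print(platform, discourse_type, counts)
```

Keeping the three indicators separate, rather than merging them into one score, leaves room for the manual review to weigh them differently per platform.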

This bulletin adheres to ethical standards of monitoring—it aims for understanding and analysis, not defamation—and uses data to support advocacy and public policy efforts to build a safer and fairer digital environment.

General Analysis and New Shifts in Discourse

Patterns of Discourse During the Monitored Period

Analysis of the documented content of the thirty monitored accounts showed the continued dominance of hate speech characterized by polarization and aggression, with some stylistic and thematic changes compared to previous editions. This period was marked by an increase in symbolic and disguised incitement, alongside a relative decrease in direct calls for violence, indicating a tactical evolution among some digital actors in how they deliver hostile messages without violating platform policies.

The following patterns were the most frequent:

1. Generalization and Collective Labeling

Many accounts used language that assigned responsibility for individuals’ actions to entire groups, portraying ethnic or political communities as “traitors” or “internal enemies,” reinforcing a binary division of society between “patriots” and “agents.”

2. Incitement to Violence and Exclusion

Although explicit calls for direct violence declined, veiled forms spread widely—such as calls to “cleanse” certain areas or “uproot the corrupt.” These expressions perform an indirect inciting function, normalizing aggressive behavior.

3. Dehumanization and Demonization

Descriptions that stripped targeted groups of their humanity—comparing them to “cancer” or “germs”—were repeatedly observed. Such expressions justify verbal and physical violence against them and turn victims into “legitimate targets.”

4. Invocation of Historical and Vengeful Narratives

Some rhetoric relied on exploiting collective memories of past conflicts to revive feelings of revenge or moral justification for current violence—linking the past with the present and rebranding hatred as a patriotic or moral duty.

5. Politicization of Identity and Merging Ethnicity with Political Loyalty

Language equating geographical or tribal belonging with political allegiance prevailed, depicting certain ethnic groups as “affiliated” with a specific political or military faction. This deepens the danger of hate speech by transforming political conflict into an identity-based one.

These patterns confirm that hate speech in the digital space has evolved beyond direct aggression into an organized and influential communication system that reshapes public awareness and fuels societal divisions in more complex and effective ways.

Digital Data and Statistics

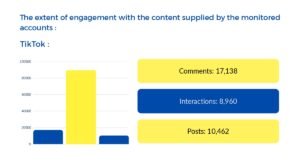

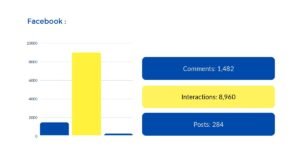

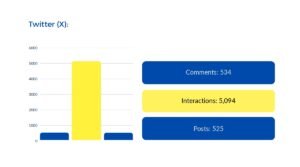

This section presents the quantitative results of monitoring thirty accounts on TikTok, Facebook, and X (Twitter), focusing on the number of followers, likes, and engagement levels with content that included patterns of hate speech.

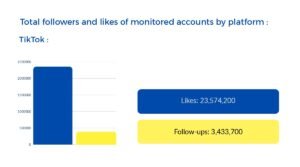

Data shows a significant increase in engagement on TikTok compared to other platforms, reflecting its visual nature and the speed at which its content spreads. Facebook recorded qualitative engagement through group discussions, while X ranked third in volume but had a stronger influence on public debate.

Table: Distribution of Monitored Accounts by Platform

Table: Total Followers and Likes for Monitored Accounts

In total, the monitored TikTok accounts recorded over 23 million likes—an indicator of the wide reach and prevalence of hate speech through short visual media.

Table: Engagement Volume with Monitored Content

Analytical Observation:

The table shows that TikTok clearly dominates all three engagement indicators, accounting for over 75% of total recorded activity. This confirms that short visual content has become more influential and widespread than textual posts or long discussions.
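A share such as the one cited above is each platform's total divided by the combined total across all platforms. The sketch below shows only that arithmetic; the function name and the numbers are placeholders assumed for illustration and are not the bulletin's recorded figures.

```python
def platform_shares(totals):
    """Return each platform's percentage share of the combined total."""
    grand_total = sum(totals.values())
    if grand_total == 0:
        raise ValueError("no engagement recorded")
    return {name: 100 * value / grand_total for name, value in totals.items()}


# Placeholder totals chosen only to make the arithmetic easy to follow;
# they are NOT the bulletin's figures.
example_totals = {"TikTok": 800, "Facebook": 150, "X": 50}

shares = platform_shares(example_totals)
for name, pct in shares.items():
    print(f"{name}: {pct:.1f}%")
```

The same calculation can be repeated separately for likes, comments, and shares to check whether one platform leads on every indicator or only on some.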

Summary of Digital Data Analysis

- TikTok as the Main Driver of Hate Speech Dissemination: The platform combines rapid spread with emotional impact, making it the most sensitive space for amplifying hostile narratives.
- Facebook Retains Its Organizational Role: Despite lower quantitative metrics than TikTok, group discussions and shares in closed communities reinforce the cumulative influence of hate speech.
- X (Twitter) as an “Opinion-Shaping” Platform: Dominated by political and media elites, it plays a role in reproducing political narratives on a wider scale.
- The Gap Between Quantitative and Qualitative Engagement: The massive numbers reflect not only reach but also the nature of “mass diffusion” of hateful content, often without critical awareness or accountability.

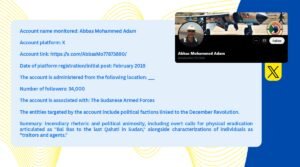

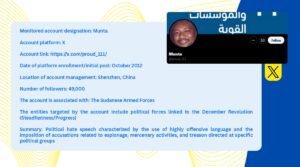

Monitored Accounts in the Bulletin

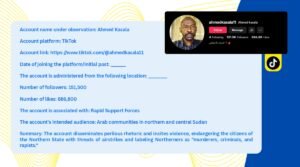

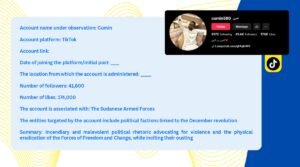

This section presents the detailed results of the monitoring conducted by the Safe Voice program on thirty active digital accounts distributed across TikTok, Facebook, and X (Twitter).

Each profile was prepared based on publicly available data, without interference in digital privacy, and includes:

- Account name and platform link.
- Number of followers and likes (if available).
- The entity or group the account represents or supports.
- The groups targeted by its discourse.
- A qualitative summary describing the nature of the content and its main rhetorical features.

This section allows readers to gain a clear view of the digital environment in which hate speech spreads and how it circulates among various actors—helping researchers and policymakers understand the real structure of this discourse and its impact on public opinion and social reality.

TikTok:

X (Twitter):

Recommendations and Proposed Policies

Based on the results of monitoring and analysis presented in this issue, the Safe Voice program recommends a set of practical measures and policies aimed at reducing the spread of hate speech and promoting responsible and safe use of the digital space in Sudan and the region.

1. Strengthening Continuous Monitoring and Documentation

- Expand monitoring to include new categories of accounts and digital influencers.
- Develop monitoring tools using AI and machine learning for faster, more accurate tracking.
- Publish periodic reports showing monthly shifts in the nature and direction of discourse.

2. Activating Digital Reporting and Accountability

- Create a joint mechanism among civil society organizations to systematically report inciting content to platform administrators, supported by evidence.
- Urge social media companies to adopt rapid response mechanisms for incitement or racist content.
- Advocate for greater transparency in content moderation and removal policies.

3. Legal Advocacy and National Policy Development

- Collaborate with legal networks and human rights organizations to pursue judicial accountability for accounts or entities promoting violence or discrimination.
- Support the adoption of a comprehensive national law against hate speech that balances freedom of expression with social responsibility.
- Encourage government agencies to integrate hate-speech prevention into national digital security and social peace strategies.

4. Counter-Messaging Campaigns

- Launch digital media campaigns featuring local influencers and youth creatives to dismantle misleading narratives and promote coexistence and equal citizenship.
- Produce positive alternative content offering realistic and human stories of groups targeted by hate speech.
- Encourage cooperation between journalists and civic initiatives to design “rapid response” tools for rumors and inciting content.

5. Collaboration with Civil Society and Academia

- Build a coordination network among organizations working in human rights, peace, and technology to exchange data and expertise.
- Support universities and research centers in conducting quantitative and qualitative studies on hate speech and its impact on social peace.
- Integrate “digital peace” training into university media and education programs.

6. Balancing Freedom and Responsibility

- Emphasize that countering hate speech does not mean suppressing freedom of expression, but regulating it to protect individuals and communities from harm.
- Promote a digital culture that distinguishes legitimate criticism from hostile speech.
- Encourage users to consciously exercise their digital rights and report violations instead of amplifying them.

These recommendations form an initial roadmap toward a fairer and safer digital environment that recognizes freedom of opinion as a fundamental right while rejecting its misuse as a pretext for spreading hatred or inciting violence. Through these policies, the Safe Voice program aims to turn monitoring and analysis into effective tools for prevention, dialogue, and accountability.

Conclusion

The findings of this issue of “Safe Voice – Accounts Under the Microscope” reveal that hate speech in the digital space remains a serious and evolving challenge that goes beyond political or tribal disagreements to affect the essence of social coexistence and national identity.

The analysis shows that hostile discourse is no longer expressed only through insults or violent language but has taken more complex forms—from sarcastic symbolism to misleading narratives—that fuel division and reproduce stereotypes about “the other.”

In response, the bulletin emphasizes that combating hate speech is not the responsibility of a single actor. It requires a collective effort that combines community awareness, legal intervention, media monitoring, and digital accountability—ultimately fostering a culture rooted in understanding digital rights and responsibilities.

Building a safe digital environment is not achieved merely by removing content but by developing critical awareness capable of analyzing discourse, understanding its roots, deconstructing its narratives, and confronting its effects through knowledge and dialogue.

The primary goal of the Safe Voice program remains to transform monitoring and analysis into a positive force for change that protects individuals, enhances digital justice, and supports the transition toward a society that respects diversity and upholds human dignity.

Finally, the bulletin reaffirms that words carry responsibility, and that discourse can be either a bridge for peace or fuel for hatred—and the choice between them determines the shape of the future we build together.